TET-GAN: Text Effects Transfer via Stylization and Destylization

Figure 1. Text effects transfer results produced by TET-GAN.

Abstract

Text effects transfer automatically makes text dramatically more impressive. However, previous style transfer methods either model general styles, which cannot handle the highly structured text effects distributed along the glyph, or require manually designed, subtle matching criteria for text effects. In this paper, we focus on using the powerful representation abilities of deep neural features for text effects transfer. For this purpose, we propose a novel Text Effects Transfer GAN (TET-GAN), which consists of a stylization subnetwork and a destylization subnetwork. The key idea is to train our network to accomplish both style transfer and style removal, so that it learns to disentangle and recombine the content and style features of text effects images. To support the training of our network, we propose a new text effects dataset with 64 professionally designed styles on 837 characters. We show that the disentangled feature representations enable us to transfer or remove all these styles on arbitrary glyphs using a single network. Furthermore, the flexible network design allows TET-GAN to be efficiently extended to a new text style via one-shot learning, where only one example is required. We demonstrate that the proposed method generates higher-quality stylized text than state-of-the-art methods.

Framework

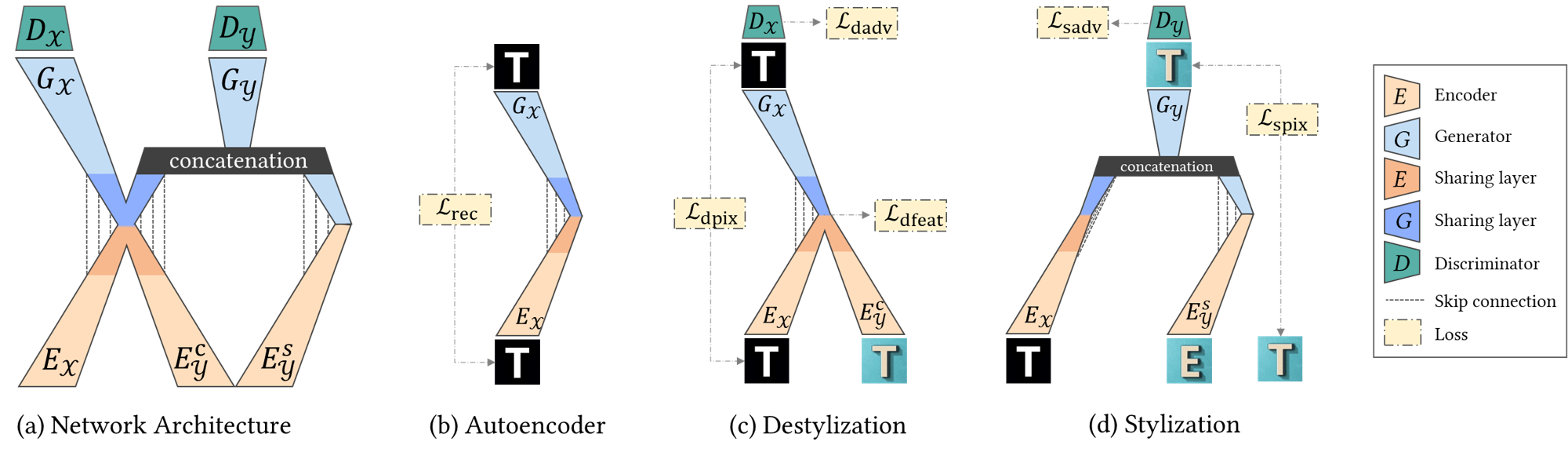

Figure 2. The TET-GAN architecture. (a) An overview of the TET-GAN architecture. Our network is trained with three objectives: glyph autoencoding, destylization, and stylization. (b) Glyph autoencoder to learn content features. (c) Destylization by disentangling content features from text effects images. (d) Stylization by combining content and style features.
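To make the three objectives in Figure 2 more concrete, here is a minimal sketch of how one training sample might feed all three. It is an illustration under stated assumptions, not the authors' implementation: images are flat lists of floats, the encoders and decoders are arbitrary callables supplied by the caller, a single content encoder is used for both raw and styled inputs (a simplification), every term is a plain L1 loss, the content features of a styled image are additionally pulled toward those of its raw glyph, and the adversarial terms and loss weights of the real model are omitted. The names `tetgan_losses`, `enc_content`, `enc_style`, `dec_glyph`, and `dec_styled` are hypothetical.

```python
from typing import Callable, List, Tuple

Vec = List[float]


def l1(a: Vec, b: Vec) -> float:
    """Mean absolute difference between two equal-length vectors."""
    assert len(a) == len(b)
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def tetgan_losses(
    x: Vec,        # raw glyph image
    y: Vec,        # the same glyph rendered with text effect S
    y_ref: Vec,    # a different glyph rendered with the same effect S
    enc_content: Callable[[Vec], Vec],
    enc_style: Callable[[Vec], Vec],
    dec_glyph: Callable[[Vec], Vec],
    dec_styled: Callable[[Vec, Vec], Vec],
) -> Tuple[float, float, float]:
    """Return (autoencoder, destylization, stylization) losses for one sample.

    Hypothetical sketch: adversarial losses and loss weights are omitted.
    """
    # (b) Glyph autoencoder: raw glyph -> content features -> raw glyph.
    c_x = enc_content(x)
    loss_ae = l1(dec_glyph(c_x), x)

    # (c) Destylization: styled image -> content features -> raw glyph.
    # Assumption: the styled image's content features are also matched
    # to the raw glyph's features so that the two encodings agree.
    c_y = enc_content(y)
    loss_desty = l1(dec_glyph(c_y), x) + l1(c_y, c_x)

    # (d) Stylization: content of x combined with the style of y_ref
    # should reproduce y, the ground-truth styled version of x.
    s = enc_style(y_ref)
    loss_sty = l1(dec_styled(c_x, s), y)

    return loss_ae, loss_desty, loss_sty
```

With identity functions as toy networks, the autoencoder term is zero and the destylization term measures how far the styled image is from its raw glyph; in a real model the callables would be trainable subnetworks and the three losses would be summed with weights before back-propagation.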

Dataset

Figure 3. An overview of our text effects dataset. Our dataset includes 64 text effects, each applied to 775 Chinese characters, 52 English letters, and 10 Arabic numerals. Each text effects image has a size of 320×320 pixels and is provided with its corresponding raw text image.
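The dataset sizes are consistent with the abstract: 775 + 52 + 10 = 837 glyphs per effect, so the full set contains 64 × 837 = 53,568 styled images, each paired with its raw glyph. The sketch below shows one hypothetical way to index these pairs and sample the triples that the stylization objective needs (raw glyph, the same glyph with an effect, and a different glyph with the same effect). The directory layout, file names, and the helpers `glyph_ids`, `pair_paths`, and `sample_triple` are assumptions for illustration, not the dataset's actual organization.

```python
import random
from typing import Dict, List, Tuple

NUM_STYLES = 64
GLYPH_COUNTS: Dict[str, int] = {"chinese": 775, "english": 52, "digit": 10}
IMAGE_SIZE = (320, 320)  # every image is 320x320 pixels


def glyph_ids() -> List[str]:
    """Hypothetical glyph identifiers such as 'english_003'."""
    return [f"{kind}_{i:03d}" for kind, n in GLYPH_COUNTS.items() for i in range(n)]


def pair_paths(style: int, glyph: str) -> Tuple[str, str]:
    """Hypothetical (raw glyph, styled image) paths for one sample."""
    return (f"raw/{glyph}.png", f"style{style:02d}/{glyph}.png")


def sample_triple(rng: random.Random, glyphs: List[str]) -> Tuple[str, str, str]:
    """Pick one style and two distinct glyphs.

    Returns (raw x, styled y, styled reference y_ref): y_ref shares the
    style of y but shows a different glyph.
    """
    style = rng.randrange(NUM_STYLES)
    g, g_ref = rng.sample(glyphs, 2)  # two distinct glyphs
    raw, styled = pair_paths(style, g)
    _, styled_ref = pair_paths(style, g_ref)
    return raw, styled, styled_ref
```

Drawing the reference from the same style but a different glyph is what forces a network to read style from one image and content from another, rather than copying the target.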

Citation

@inproceedings{Yang2019TETGAN,
  title={TET-GAN: Text Effects Transfer via Stylization and Destylization},
  author={Yang, Shuai and Liu, Jiaying and Wang, Wenjing and Guo, Zongming},
  booktitle={AAAI Conference on Artificial Intelligence},
  year={2019}
}

Selected Results

Figure 4. Comparison with state-of-the-art methods on various text effects. (a) Input example text effects with the target text in the lower-left corner. (b) Our destylization results. (c) Our stylization results. (d) pix2pix-cGAN [1]. (e) StarGAN [2]. (f) T-Effect [3]. (g) Neural Doodles [4]. (h) Neural Style Transfer [5].

Figure 5. Text effects interpolation.
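With a network that separates content and style features, one natural way to produce such interpolations is to blend the style features of two example effects and decode each blend with fixed content features. The sketch below assumes a simple linear blend in style-feature space; the functions `lerp` and `interpolate_effects` are hypothetical, and the decoder is left abstract, so this illustrates the idea rather than the authors' exact procedure.

```python
from typing import Callable, List

Vec = List[float]


def lerp(a: Vec, b: Vec, t: float) -> Vec:
    """Linear blend (1 - t) * a + t * b of two equal-length feature vectors."""
    assert len(a) == len(b)
    return [(1.0 - t) * p + t * q for p, q in zip(a, b)]


def interpolate_effects(
    content: Vec,
    style_a: Vec,
    style_b: Vec,
    decode: Callable[[Vec, Vec], Vec],
    steps: int = 5,
) -> List[Vec]:
    """Decode `steps` images whose style moves from style_a to style_b.

    The content features stay fixed, so the glyph shape is preserved
    while only the text effect changes.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2 to include both endpoints")
    ts = [i / (steps - 1) for i in range(steps)]
    return [decode(content, lerp(style_a, style_b, t)) for t in ts]
```

The first and last outputs reproduce the two source effects exactly, and the intermediate frames are only as meaningful as the learned style space is smooth.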

References

[1] P. Isola, J. Y. Zhu, T. Zhou, and A. A. Efros. Image-to-image translation with conditional adversarial networks. CVPR 2017.

[2] Y. Choi, M. Choi, M. Kim, J. W. Ha, S. Kim, and J. Choo. StarGAN: Unified generative adversarial networks for multi-domain image-to-image translation. CVPR 2018.

[3] S. Yang, J. Liu, Z. Lian, and Z. Guo. Awesome typography: Statistics-based text effects transfer. CVPR 2017.

[4] A. J. Champandard. Semantic style transfer and turning two-bit doodles into fine artworks. arXiv:1603.01768, 2016.

[5] L. A. Gatys, A. S. Ecker, and M. Bethge. Image style transfer using convolutional neural networks. CVPR 2016.